This post is part of a series of blog posts on the Best and Cheapest Oracle APEX hosting: Free Oracle Cloud.

In this post, we will install and use SQLcl and Datapump from the Compute Instance (VM) connecting to our Oracle Database in the Autonomous Transaction Processing (ATP) Cloud.

Although I use most of the time SQL Developer to connect to the database, I find it important to be able to use command-line tools too, as this is what you can automate and it's really fast.

In the previous post, we installed the command line tools of the Oracle Cloud on our own machine, but for the Oracle Tools, I prefer to install them on our Compute Instance in the Cloud. Especially when we want to automate something, it's easier to do this from another machine in the cloud. It also makes it easier to follow as we only have to focus on how to install the Oracle Tools on Linux.

Oracle Instant Client

In order to connect to an Oracle database from a machine, we will use the Oracle Instant Client software. You can download the software for the different operating systems, but as our VM is running Oracle Linux we can install it with just a few commands:

First, update yum so it's smarter where to find Oracle software:

yum install oracle-release-el7

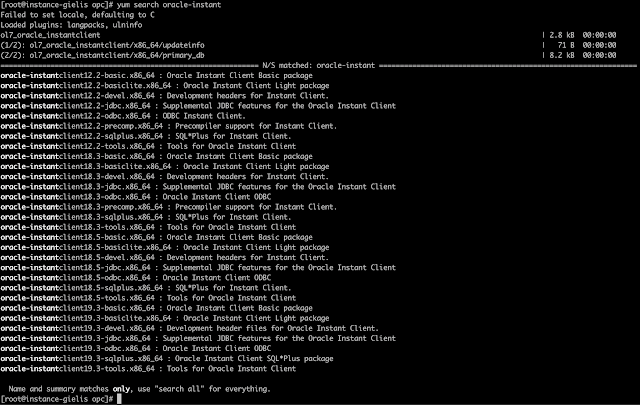

Next, we can search for the Oracle Instant Client version we need:

yum search oracle-instant

yum install oracle-instantclient18.5-tools.x86_64

As a last step we set some environment variables:

export PATH=/usr/lib/oracle/18.5/client64/bin:$PATH

export LD_LIBRARY_PATH=/usr/lib/oracle/18.5/client64/lib

export TNS_ADMIN=/usr/lib/oracle/18.5/client64/lib/network/admin

Before we move on to installing SQLcl, make sure you still have the credentials (wallet) file we used when connecting with SQL Developer to our database. Just like with SQL Developer, we also need this with SQLcl to connect to our database. As a reminder here's the screenshot I'm talking about:

Upload both the SQLcl and Credentials zip file to the Compute Instance (VM):

ssh -i .ssh/oraclecloud opc@132.145.215.55

yum install java

ln -s /opt/sqlcl/bin/sql /usr/lib/oracle/18.5/client64/bin/sql

sql admin@dbdimi_high

There we go... we can connect from our VM to our ATP database.

The next thing we want to do is export the data from our ATP database. We will use Datapump that came with the installation of the tools.

Run the command to export the schema CLOUD:

expdp admin@dbdimi_high \

exclude=index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link \

data_options=group_partition_table_data \

parallel=1 \

schemas=cloud \

dumpfile=export%u.dmp

So where did this export go? To the default DATA_PUMP_DIR directory we don't have direct access to... but to list the files in the directory we can do:

SELECT * FROM DBMS_CLOUD.LIST_FILES('DATA_PUMP_DIR');

Remember my previous blog post about the Object Storage, in which we set up a Backups bucket?

Oracle allows you to connect your Object Storage to your ATP database and that is exactly what we will do further on :)

We will use the same user we created earlier for CLI. In order to connect to ATP we need to set up an Auth Token. Go to the User Details of cliUser and click the Auth Tokens:

Click the Generate Token button:

There's the token... you only see it once, so make sure to copy it:

Next, connect to your ATP database and run the script to add the credentials to the ATP database:

begin

dbms_cloud.create_credential(

credential_name => 'DEF_CRED_NAME'

, username => 'cliUser'

, password => 'Frx}R9lD0O}dIgZRGs{:'

);

end;

/

Now that the DBMS_CLOUD package has credentials, we can do other calls with this package.

To add the Datapump export files to the Object Storage, we can use the PUT_OBJECT procedure.

I created a small script to take all the files from the DATA_PUMP_DIR and put them in the backups Bucket in the Object Storage:

begin

for r in (select object_name, bytes

from dbms_cloud.list_files('DATA_PUMP_DIR'))

loop

dbms_cloud.put_object(credential_name => 'DEF_CRED_NAME',

object_uri => 'https://objectstorage.us-ashburn-1.oraclecloud.com/n/id9u4qbhnjxj/b/backups/o/'||r.object_name,

directory_name => 'DATA_PUMP_DIR',

file_name => r.object_name);

end loop;

end;

/

And when we check our bucket, we see the Datapump export files! Yay!

We also want to export our Oracle APEX apps. In some projects, I use the APEXExport utility, but now we will use SQLcl to export our APEX app 101:

apex export 101

In real life I typically create a few scripts which I can run one-by-one or combined in a general backup script. The script will export the Oracle schemas, the APEX apps and save the files to another location, in our case the Object Storage.

vi make_backup.sh

You can schedule this script with crontab, for example, every day at 2AM:

The above is just an example of what you can do to automate your backups. You have to decide how frequently you want to do those backups.

If you want to move your existing Oracle database and APEX apps to the Oracle Cloud, the steps are similar to above. You upload your Datapump export file to your Object Storage. Next, run the Data Pump Import with the dump file parameter set to the list of file URLs on your Cloud Object Storage and the credential parameter set to the name of the credential you created earlier. For example:

impdp admin/password@dbdimi_high \

directory=data_pump_dir \

credential=def_cred_name \

dumpfile= https://objectstorage.us-ashburn-1.oraclecloud.com/n/adwc/b/adwc_user/o/export%u.dmp \

parallel=1 \

partition_options=merge \

transform=segment_attributes:n \

transform=dwcs_cvt_iots:y transform=constraint_use_default_index:y \

exclude=index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link

8 comments:

Great guide, well written.

One question,for sqlplus or impdp; do we need the wallet file or is there a simpler way with a direct connection string?

Awesome stuff! Much better than the original documentation!

Omar

Hi Dimitri!

Very usefull guide! I'm not familiar with Linux and by the way learn it. All goes well (download to bucket, upload) until I reconnect to VM. Now I can't use CLI:

[root@my-server opc]# /root/bin/oci --help

Traceback (most recent call last):

File "/root/bin/oci", line 5, in

from oci_cli.cli import cli

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci_cli/__init__.py", line 10, in

from oci import fips

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci/__init__.py", line 4, in

from . import announcements_service, audit, autoscaling, budget, container_engine, core, database, dns, dts, email, events, file_storage, functions, healthchecks, identity, key_management, limits, load_balancer, monitoring, object_storage, oce, oda, ons, resource_manager, resource_search, streaming, waas, work_requests

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci/announcements_service/__init__.py", line 7, in

from .announcement_client import AnnouncementClient

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci/announcements_service/announcement_client.py", line 6, in

from oci._vendor import requests # noqa: F401

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci/_vendor/__init__.py", line 4, in

from . import chardet # noqa: F401

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci/_vendor/chardet/__init__.py", line 24, in

from .universaldetector import UniversalDetector

File "/root/lib/oracle-cli/lib/python2.7/site-packages/oci/_vendor/chardet/universaldetector.py", line 17, in

import logging

File "/opt/rh/python27/root/usr/lib64/python2.7/logging/__init__.py", line 26,in

import sys, os, time, cStringIO, traceback, warnings, weakref, collections

File "/opt/rh/python27/root/usr/lib64/python2.7/weakref.py", line 14, in

from _weakref import (

ImportError: cannot import name _remove_dead_weakref

Could you help me?

Greetengs from Poland!

Andrzej

Hello!

Excellent post as usual. Thank you for your efforts!

After trial period code for loading from DATA_PUMP_DIR to backup raises the following error:

Error report -

ORA-20000: Unable to access directory object - ORA-01857: not a valid time zone - DATA_PUMP_DIR

ORA-06512: in "C##CLOUD$SERVICE.DBMS_CLOUD", line 598

ORA-06512: in "C##CLOUD$SERVICE.DBMS_CLOUD", line 1718

ORA-06512: in line 2

ORA-06512: in line 2

20000. 00000 - "%s"

*Cause: The stored procedure 'raise_application_error'

was called which causes this error to be generated.

*Action: Correct the problem as described in the error message or contact

the application administrator or DBA for more information.

Any ideas?

Thank you!

Nik Saridakis

Dimitri,

I am trying to apply your sql connection steps to a an exadata express database from a free VM

Thus installing the basic and tool package (18.3) on the free VM

installing sqlcl (19.4)

ln -s /opt/sqlcl/bin/sql /usr/lib/oracle/18.3/client64/bin/sql

unzip my credentails within /usr/lib/oracle/18.3/client64/lib/network/admin

change the content of sqlnet.ora in

WALLET_LOCATION = (SOURCE = (METHOD = file)

(METHOD_DATA = (DIRECTORY="/usr/lib/oracle/18.3/client64/lib/network/admin")))

SSL_SERVER_DN_MATCH=yes

Adding the env settings to .bash_profile of the opc user

reconnect as opc

and then on the command line:

plato@dbaccess (the named entry within the unzipped tnsnames.ora)

result in

Password? (**********?) *****

USER = plato

URL = jdbc:oracle:thin:@(description= (address=(protocol=tcps)(port=1522)(host=<>)) (connect_data=(service_name=<>)) (security=(ssl_server_cert_dn="CN=<>,O=Oracle Corporation,L=Redwood Shores,ST=California,C=US")) )

Error Message = IO Error: IO Error General SSLEngine problem, Authentication lapse 0 ms.

Username? (RETRYING) ('plato/*********@dbaccess'?)

!!For obvious reasons I have scrambled the host name and service name!!

Any ideas?

Hi Dimitri. Awesome set of Posts.

Im running into an issue when I try and do an expdp.. Ive downloaded the same version of client as you.. Im getting the error below.

[root@instance-20200304-1234 Downloads]# expdp admin@db202003031036_high schemas=vrs dumpfile=export%u.dmp

Export: Release 18.0.0.0.0 - Production on Mon Sep 21 06:44:18 2020

Version 18.5.0.0.0

Copyright (c) 1982, 2018, Oracle and/or its affiliates. All rights reserved.

Password:

Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

UDE-31626: operation generated ORACLE error 31626

ORA-31626: job does not exist

ORA-06512: at "SYS.DBMS_SYS_ERROR", line 79

ORA-06512: at "SYS.DBMS_DATAPUMP", line 4749

ORA-04063: package body "SYS.KUPU$UTILITIES" has errors

ORA-06508: PL/SQL: could not find program unit being called: "SYS.KUPU$UTILITIES"

ORA-06512: at "SYS.DBMS_DATAPUMP", line 4453

ORA-06512: at "SYS.DBMS_DATAPUMP", line 6127

ORA-06512: at line 1

This tells me something hasn't been setup or configured correctly on the ATP instance to do an export. Iv asked the question on the cloud forum, but not got any answers. Do you have any ideas?

Many Thanks

Richard

Hi Dimitri. Great blog post. I have everything working up until the IMPDP command When I run it I get the following error:

impdp admin/"mypassword"@dev01_high \

directory=data_pump_dir \

credential=DEF_CRED_NAME \

dumpfile= https://objectstorage.changeme.oraclecloud.com/n/changme/b/backups/o/dev01/2021/01/2021-01-21-04-06/export%u.dmp \

remap_schema=mdsouzaa:mdsouzab \

parallel=1 \

partition_options=merge \

transform=segment_attributes:n \

transform=dwcs_cvt_iots:y transform=constraint_use_default_index:y

Import: Release 19.0.0.0.0 - Production on Thu Jan 21 13:11:36 2021

Version 19.9.0.0.0

Copyright (c) 1982, 2020, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

ORA-39001: invalid argument value

ORA-39000: bad dump file specification

ORA-31640: unable to open dump file "/u03/dbfs/B683.../data/dpdump/expdat.dmp" for read

ORA-27037: unable to obtain file status

Linux-x86_64 Error: 2: No such file or directory

Additional information: 7

This kind of makes sense since my file is named "export01.dmp". I've tried explicitly listing the file as "export01.dmp" but still get the same error. Seems that it's looking for "expdat.dmp" Any ideas on how to resolve?

A couple of points really:

Yes I can create a backup file, but I don't seem to be able to use it. If I change the bucket specification to /files/ name for my bucket from /backup/ it seesm to work.

I also had some issues around bad dump file spec, so I would include the version = parameter in the import and export commands.

Paul

Post a Comment