First, if you have never heard of APEX Office Print, or AOP for short, it's the leading document generation software for Oracle APEX. AOP allows you to create PDF, Word, Excel, PowerPoint, Text, and HTML documents with data from your database and your Oracle APEX app. AOP is unique in that it's tightly integrated with Oracle APEX. You create a template in Word, Excel, PowerPoint, Text, or HTML, and the tags in your template are replaced by data. In the tweet, Matic speaks about the {&interactive} tag. When you add this tag to your Word, Excel, or HTML document, AOP generates the Interactive Report in your document. AOP is even smart enough to recognize any filters, highlights, groupings, etc., you have defined!

So I decided to create an example to see what it would take to get the Saved Report name into the document.

I went into the AOP Sample Application and clicked on the example where there are Saved Reports for an Interactive Report. As I understand it, the question is how to use the name of the saved report, in our case "3. Highlight", in the export to Excel or PDF.

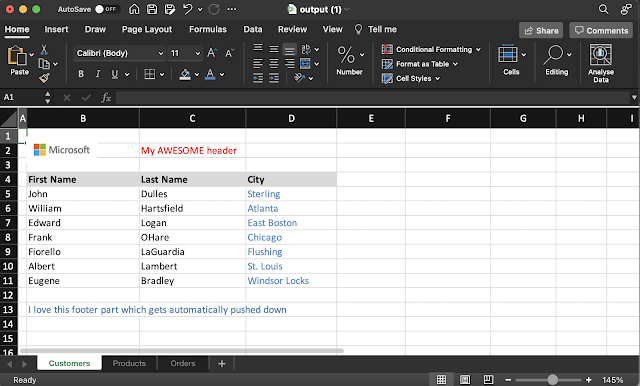

The result when you export or download to Excel should look like this:

Here are the steps to get it done.

1. Create a hidden item on the page. We will use this item to store the name of the saved report.

2. Create a dynamic action on click of the Excel button and add a Set Value Action with the following JavaScript Expression:

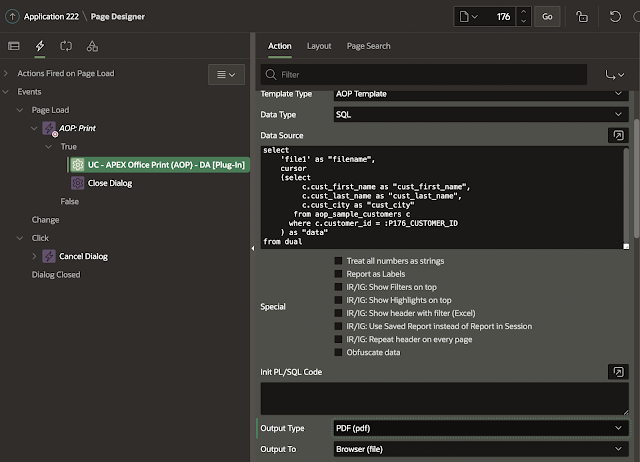

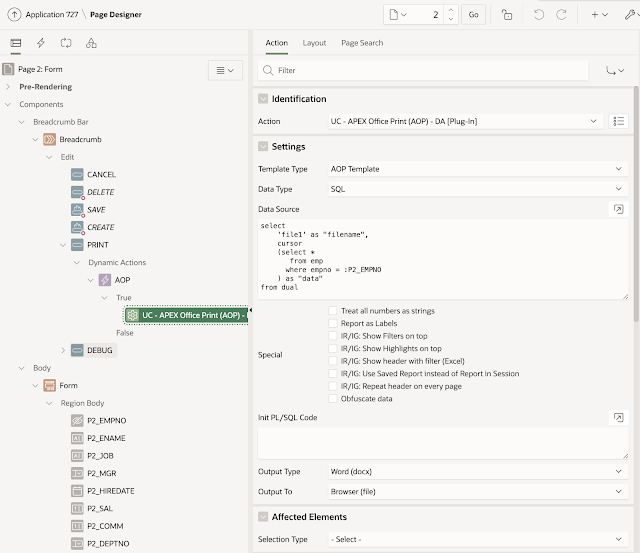
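The exact expression used here is only shown in the screenshot above, so the following is not a transcription of it. It is a minimal sketch of one way to read the selected saved report's name, assuming the IR region has the static ID "ireport1" and that APEX renders the saved-report select list with the ID `ireport1_saved_reports` (an assumption worth verifying in your browser's inspector). The helper name `savedReportTitle` is hypothetical.

```javascript
// Assumption: the saved-report select list has the ID
// "<region static id>_saved_reports". Verify this in your own app.
function savedReportTitle(selectEl) {
  // No select list (for example, no saved reports) or nothing selected.
  if (!selectEl || selectEl.selectedIndex < 0) {
    return "";
  }
  const option = selectEl.options[selectEl.selectedIndex];
  // Use the visible label of the option, e.g. "3. Highlight".
  return option ? option.text.trim() : "";
}

// In the Set Value action, the JavaScript Expression could then be:
// savedReportTitle(document.getElementById("ireport1_saved_reports"))
```

Returning an empty string when the list is missing keeps the hidden item blank instead of raising an error when no saved report exists.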

3. Add the APEX Office Print Dynamic Action plug-in as another Action. We tell AOP that our template is stored in Static Application Files under the name "aop_IR_template_saved_report.xlsx". Our data comes from the IR, so we specify the Static ID of the IR region, "ireport1". For the output we chose Excel, but you could pick another format, such as PDF. It's important to add the hidden item in the "Affected Elements" section, as AOP will pick up the values of those items too.

4. And finally, this is what our template looks like.

The hidden item is in the ireport region. You can reference the item with the following tag:

{ireport_items[0].P5061_SAVED_REPORT_TITLE}. Alternatively, if you want to reference multiple items, you can wrap them in a loop: {#ireport_items} ... define all your items with {tag} ... {/ireport_items}
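To picture how these two tag styles relate, here is a small mental model in JavaScript. It is not AOP's engine, and the data shape is an assumption: I'm guessing that the items arrive as an array called `ireport_items` holding one object keyed by item name. The functions `resolvePath` and `render` are hypothetical helpers written only for this illustration.

```javascript
// Hypothetical data shape; the real AOP payload may differ.
const data = {
  ireport_items: [{ P5061_SAVED_REPORT_TITLE: "3. Highlight" }],
};

// Resolve a path such as "ireport_items[0].P5061_SAVED_REPORT_TITLE".
function resolvePath(obj, path) {
  return path
    .replace(/\[(\d+)\]/g, ".$1") // turn [0] into .0
    .split(".")
    .reduce((value, key) => (value == null ? undefined : value[key]), obj);
}

// Expand {#name}...{/name} loops first, then plain {path} tags.
function render(template, context) {
  const looped = template.replace(
    /\{#(\w+)\}([\s\S]*?)\{\/\1\}/g,
    (_, name, body) =>
      (context[name] || []).map((row) => render(body, row)).join("")
  );
  return looped.replace(/\{([\w.\[\]]+)\}/g, (match, path) => {
    const value = resolvePath(context, path);
    // Leave unknown tags untouched rather than printing "undefined".
    return value === undefined ? match : String(value);
  });
}

const direct = render(
  "Report: {ireport_items[0].P5061_SAVED_REPORT_TITLE}",
  data
);
const viaLoop = render(
  "{#ireport_items}Report: {P5061_SAVED_REPORT_TITLE}{/ireport_items}",
  data
);
```

Under these assumptions, both `direct` and `viaLoop` come out as the same text. The loop form just saves you from repeating the `ireport_items[0].` prefix for every item.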

To get the Interactive Report, put the {&interactive} tag in a cell of your choice.

In case you submit multiple IRs, or a combination of Classic Reports, IGs, and IRs, it would be {&interactive_1} {&interactive_2} {&classic1&} {&ig1&} etc.

And that is it!

I'm always amazed by how far we have brought AOP in the last 7 years. We have never been more committed to providing solutions to all of the reporting, exporting, and document generation needs you have ... and there is more to come 😁